From chatbots to generative AI to autonomous vehicles, AI has taken the world by storm. And although the applications of artificial intelligence in HR are still somewhat new, demand keeps increasing.

During a recent conversation, our resident AI expert, Rabih Zbib, spoke about the current and future state of this technology and what it brings to our developments at Avature.

With a Master of Science and a Ph.D. from MIT, Rabih is the Director of Natural Language Processing and Machine Learning at Avature. He works on applying these branches of artificial intelligence to solve challenges in recruiting and talent management.

Of course, we wanted to pick his brains to understand the most critical elements when it comes to artificial intelligence in HR.

Setting the Right Expectations in Your AI Strategy

One of the main issues when it comes to AI for HR is how to implement it, with many companies and vendors struggling with a constant “expectation vs. reality” dilemma.

“There is an expectation that AI is the magic bullet, but when it comes to the nitty-gritty, setting the expectations is the challenge, and it’s the challenge for the sector in general.”

-Rabih Zbib. Director of Natural Language Processing & Machine Learning at Avature.

What Can Artificial Intelligence in HR Do For You?

Picking up on this, Rabih describes these expectations as two-fold:

- What is realistic in terms of the current capability of the technology? What can it do? What can’t it do?

With HR teams already using or looking to leverage popular AI technologies like ChatGPT for many day-to-day tasks, it may seem that we’re in a moment where anything is possible. However, we must keep our expectations realistic and actionable when implementing strategies that involve technology: it can enhance processes, but it won’t solve any issues by itself.

Regarding Avature, Rabih highlights the importance of developing a partnership and feedback loop with the customer, noting that it’s not just about what the technology can do. He and his team are dedicated to listening to customers’ real pain points so that this information can be incorporated into the technical approach.

What makes this even more complex is that the capabilities of artificial intelligence in HR are advancing fast.

What Should AI Do For You?

- What should we allow the technology to do? What shouldn’t it do depending on the application sector?

“We may be comfortable letting it recommend which brand of sneakers we should be buying, but are we comfortable with the same technology recommending who we should hire? There’s a lot of nuance there.”

-Rabih Zbib. Director of Natural Language Processing & Machine Learning at Avature.

In other words, he highlights the importance of an ethical application of artificial intelligence in HR. You’re probably starting to think about bias here – a critical consideration that we’ll explore in detail below. But there’s also an emphasis on the value of maintaining the human aspect.

Let’s take hiring as an example. With today’s technology, you could completely eliminate interaction between applicants and the recruiter, leveraging automated screening, knock-out questions and AI-powered scoring to identify the best candidate based on your pre-defined criteria.

With everything set up correctly, you could even automate the offer process and hire someone without even minimal interaction. Efficient, but in practice, what impression does this dehumanized approach give to candidates, and what hard-to-quantify human insight will be lost?

An ethical application of artificial intelligence should take into account people as well as data, or else your talent process is likely to lead to a depersonalized approach that will only center on efficiency and affect the candidate or employee experience.

Key Considerations When Applying Artificial Intelligence in HR

Data and How to Use It

“Your technology is as good as your data” is a critical mantra in the fields of artificial intelligence and machine learning. Data is the key component in building a machine-learning strategy; it can definitely make or break it.

Not every vendor is able to offer experience and wealth in data or even transparency when it comes to how it was gathered. And this should be one of your key concerns when evaluating who to work with.

Many start-ups and vendors are using AI as a selling point to address niche ideas and point challenges, offering just one solution to one problem and failing to deliver on the broader promise.

When the broader challenge is to move towards optimizing workflows, outcomes and user experience at a platform level, then it isn’t really clear how it can be achieved through outside and inorganic data.

When choosing an “off-the-shelf” solution, Rabih points out that you don’t know where the data behind the vendor’s models comes from. Though many vendors in the market offer AI functionality that solves complex problems related to hiring and managing people, there’s a lot of doubt surrounding where and how the data was obtained and, therefore, whether the resulting AI systems are suitable for solving your problems.

When it comes to Avature’s machine learning and AI strategy, Rabih considers data to be a main pillar and a competitive differentiator because we are building it in-house with heterogeneous and high-quality HR-specific data we’ve gathered working with large enterprises.

“We have access to real data that spans multiple industries, multiple sectors and a long historical period. So that data is an opportunity but also a responsibility for all of the obvious concerns about data privacy and security.”

-Rabih Zbib. Director of Natural Language Processing & Machine Learning at Avature.

Black-Box and White-Box Approaches to AI for HR

This brings us to another issue in many AI strategies: black-box versus white-box.

Where black-box refers to an opaque approach to AI, in which a user has limited control over the decisions being made by the algorithms, a white-box approach differs by offering users the last word. This means ensuring complete transparency regarding the criteria the algorithm is using to provide a suggestion and allowing them to adjust the factors considered.

In this sense, Rabih stresses that it’s important to keep the customer informed about what the system is recommending and about how it is forming its recommendations in order to support decision-making rather than having the system make the decisions for them.
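To make the white-box idea concrete, here is a minimal sketch of a score that shows its reasoning and lets the user adjust it. The factor names, weights and the `explain_score` helper are all assumptions made for illustration, not a description of how Avature's engine works.

```python
from dataclasses import dataclass


@dataclass
class Explanation:
    score: float
    contributions: dict  # factor name -> weighted contribution to the score


def explain_score(candidate: dict, weights: dict) -> Explanation:
    """Score a candidate as a weighted sum and expose each factor's share."""
    contributions = {
        factor: weight * candidate.get(factor, 0.0)
        for factor, weight in weights.items()
    }
    return Explanation(score=sum(contributions.values()), contributions=contributions)


# Hypothetical factors, each already normalized to the 0..1 range.
candidate = {"skills_match": 0.8, "years_experience": 0.5, "location_match": 1.0}
weights = {"skills_match": 0.6, "years_experience": 0.3, "location_match": 0.1}

result = explain_score(candidate, weights)

# The user has the last word: here they decide location should not count.
adjusted = explain_score(candidate, {**weights, "location_match": 0.0})

for factor, value in result.contributions.items():
    print(f"{factor}: {value:.2f}")
```

Because every contribution is visible, a recruiter can see which criterion drove a suggestion and change the weights, rather than accepting an unexplained number.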

At Avature, we offer a platform in which AI is embedded in the architecture. In addition, the experts who build the AI engine at the core and apply AI-powered tools in different parts of the platform are just a phone call away, available to explain in detail how everything works.

All in all, it’s essential to have a clear message and strategy to understand where AI is being applied, what’s being tracked and what you’re able to do. If not, the technology won’t be suitable for global organizations searching for reliable AI to support their strategies.

When It Comes to Artificial Intelligence in HR, Transparency Is Key

Transparency is one of the main factors here: letting the end-user know what information the system is using. That, in turn, has implications for the technical approach employed.

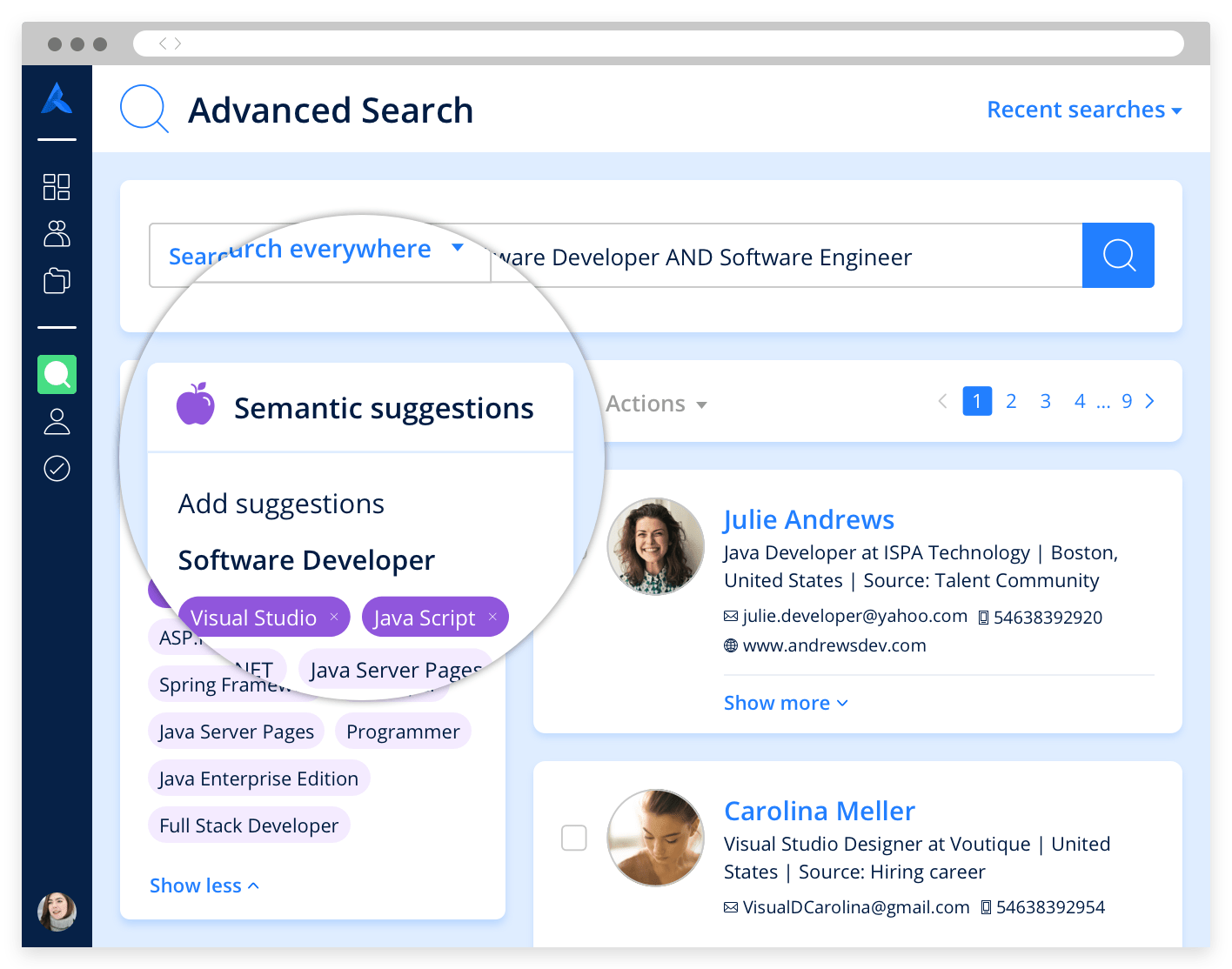

Take, for example, semantic suggestions while searching a talent pool. As the sourcer starts looking for candidates with a specific skill, the engine will prompt the user with term recommendations to expand the search to candidates having relevant neighboring skills.

This way, the user can understand why the system is prompting a specific suggestion and, at all times, dismiss recommendations if they’re not relevant.

Avature’s advanced search feature, displaying semantic suggestions as the user types keywords and showing candidate results.
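The semantic suggestions described above could be sketched roughly as follows. The `RELATED_SKILLS` map and the `suggest_terms` function are hypothetical stand-ins: a real engine would derive related skills from data rather than from a hand-written dictionary.

```python
# Hypothetical related-skills map; a real system would learn this from data.
RELATED_SKILLS = {
    "python": ["django", "pandas", "flask"],
    "recruiting": ["sourcing", "talent acquisition"],
}


def suggest_terms(query_terms, dismissed=()):
    """Return related terms, each tagged with the query term that triggered it."""
    # Never suggest something the user already typed or already dismissed.
    seen = {t.lower() for t in query_terms} | {d.lower() for d in dismissed}
    suggestions = []
    for term in query_terms:
        for related in RELATED_SKILLS.get(term.lower(), []):
            if related not in seen:
                seen.add(related)
                suggestions.append({"term": related, "because_of": term})
    return suggestions


suggestions = suggest_terms(["Python"], dismissed=["flask"])
for s in suggestions:
    print(f"Suggested '{s['term']}' because you searched for '{s['because_of']}'")
```

Keeping the `because_of` field is what lets the interface explain each suggestion, and the `dismissed` argument keeps the decision in the user's hands.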

“How do you build that, respecting the privacy of the users and do so in a way that avoids bias?”

This should be a main point to keep in mind during strategy deployment. If the vendor you choose to work with is building its AI capabilities organically through a white-box approach, Rabih mentions that it’s likely that they’re using relevant data that respects privacy and security.

This also means that the process for mitigating bias can start in the early development stages, and due to transparency, it’s easier to trace bias throughout the process.

In contrast, an opaque black-box approach risks replicating errors and bias systemically and makes it tremendously difficult to track and fix problematic input and calculations. Data privacy and bias are both real concerns that are always present when formalizing an AI strategy.

Avoiding Bias

We can’t talk about artificial intelligence in HR without touching on bias. This has been a main concern for companies for a while; just look at what happened with GPT-3. And with complex regulations appearing around the globe, such as New York City’s Local Law 144, which requires annual bias audits of AI-powered hiring technology, it’s essential to understand exactly how AI is working.
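As a rough illustration of what a bias audit might measure, the sketch below computes selection rates per group and an impact ratio, meaning each group's selection rate divided by the highest group's rate. The group labels and counts are invented, and this is a simplified example rather than the procedure any specific regulation prescribes.

```python
def selection_rates(outcomes):
    """outcomes: iterable of (category, was_selected) pairs -> rate per category."""
    totals, selected = {}, {}
    for category, was_selected in outcomes:
        totals[category] = totals.get(category, 0) + 1
        selected[category] = selected.get(category, 0) + int(was_selected)
    return {c: selected[c] / totals[c] for c in totals}


def impact_ratios(rates):
    """Divide each category's selection rate by the highest selection rate."""
    best = max(rates.values())
    if best == 0:
        return {c: 0.0 for c in rates}  # nobody was selected in any category
    return {c: rate / best for c, rate in rates.items()}


# Invented data: 10 candidates per group, 5 selected in A and 3 in B.
outcomes = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)

rates = selection_rates(outcomes)
ratios = impact_ratios(rates)
print(rates, ratios)
```

A ratio well below 1.0 for a group is a signal to investigate how the tool is scoring candidates, not a verdict on its own.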

Related to the technical approach, Rabih points out the importance of keeping an eye on the kind of information and data being used, as well as the algorithms.

A typical use case that he mentions as an example of where bias can occur is in recommendations. This could be in matching or filtering. So why does this happen and how can we work to avoid it?

Explicit Bias

In order to build these models, there are steps that can be taken to try and avoid unwanted and unintentional bias.

For cases related to explicit bias, Rabih mentions a series of more “obvious” actions he and his team take to ward it off, such as not using gender, personal information or race to train the models. They don’t use personal traits either, such as voice, speech quality or facial recognition.
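In code, this kind of exclusion can be as simple as dropping fields before any training happens. The field names in this sketch are hypothetical; the point is only that the exclusion list is explicit and easy to inspect.

```python
# Hypothetical field names covering the kinds of attributes named above.
EXCLUDED_FIELDS = {
    "gender", "race", "date_of_birth", "home_address", "voice_sample", "photo",
}


def training_features(record: dict) -> dict:
    """Keep only the fields that are not on the exclusion list."""
    return {k: v for k, v in record.items() if k not in EXCLUDED_FIELDS}


record = {
    "skills": ["sql", "python"],
    "years_experience": 4,
    "gender": "F",
    "photo": "img.jpg",
}
features = training_features(record)
print(features)
```

Removing explicit fields does nothing about information that leaks in indirectly through other data, which is where implicit bias comes in.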

Implicit Bias

When it comes to implicit bias, Rabih suggests building a semantic representation of candidates and jobs based on the skills required, rather than using historical data (meaning human-made decisions during a specific moment in time). Why? Because there’s a chance these historical decisions could contain bias, which trickles into your current model.

In contrast, with semantic representations, similarities can be measured between what you’re looking for and what your candidate or job database contains, therefore allowing for recommendations to be made without the bias that might be contained in the historical data.
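A minimal sketch of this kind of similarity-based matching is shown below. It assumes simple one-hot skill vectors and cosine similarity as stand-ins; a production system would more likely use learned embeddings, and the vocabulary and candidates here are invented.

```python
import math


def skill_vector(skills, vocabulary):
    """Represent a set of skills as a 0/1 vector over a fixed vocabulary."""
    return [1.0 if skill in skills else 0.0 for skill in vocabulary]


def cosine(a, b):
    """Cosine similarity between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


VOCAB = ["python", "sql", "machine learning", "recruiting", "negotiation"]
job = {"python", "sql", "machine learning"}
candidates = {
    "cand_a": {"python", "sql"},
    "cand_b": {"recruiting", "negotiation"},
    "cand_c": {"python", "machine learning", "negotiation"},
}

job_vec = skill_vector(job, VOCAB)
scores = {
    name: cosine(skill_vector(skills, VOCAB), job_vec)
    for name, skills in candidates.items()
}
ranked = sorted(scores, key=scores.get, reverse=True)
print(ranked)
```

Notice that no past hiring decision appears anywhere in the calculation: the ranking depends only on how close each candidate's skills are to the job's.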

The Avature Approach to Artificial Intelligence for HR

AI is part of the Avature architecture, and our roadmap reflects that with the technology being implemented at several levels.

Level One

The first level deals with task automation. Eight years ago, we introduced a resume parser to the platform, built to understand several languages and automatically extract relevant personal information to populate a Person Record within the platform.

The system is also capable of detecting similar records and merging them to avoid duplicates. This saves time for the user, who no longer has to perform this task manually.
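One simple way to picture duplicate detection is an exact match on email combined with fuzzy matching on names. The sketch below uses Python's standard `difflib` for the fuzzy part; the record fields, the 0.85 threshold and the merge rule are assumptions for illustration, not the platform's actual logic.

```python
from difflib import SequenceMatcher


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial differences are ignored."""
    return " ".join(text.lower().split())


def likely_duplicates(a: dict, b: dict, threshold: float = 0.85) -> bool:
    """Flag two records as duplicates on equal email or very similar names."""
    email_a, email_b = normalize(a.get("email", "")), normalize(b.get("email", ""))
    if email_a and email_a == email_b:
        return True
    name_a, name_b = normalize(a.get("name", "")), normalize(b.get("name", ""))
    if not name_a or not name_b:
        return False
    return SequenceMatcher(None, name_a, name_b).ratio() >= threshold


def merge(a: dict, b: dict) -> dict:
    """Combine two records, keeping the first non-empty value for each field."""
    merged = dict(a)
    for key, value in b.items():
        if not merged.get(key):
            merged[key] = value
    return merged


r1 = {"name": "Jane Doe", "email": "jane.doe@example.com", "phone": ""}
r2 = {"name": "Jane  DOE", "email": "Jane.Doe@example.com", "phone": "555-0100"}
r3 = {"name": "John Smith", "email": "john@example.com"}

if likely_duplicates(r1, r2):
    merged = merge(r1, r2)
    print(merged)
```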

We are continuously improving the resume parser. For instance, we’ve expanded our ability to parse resumes and extract information in Bulgarian, Croatian, Estonian, Finnish, Latvian, Lithuanian, Polish, Romanian, Slovak and Slovenian. These have joined the list of 17 languages that are already supported by our resume parser engine.

Level Two

The second level is intended to deal with information at scale, helping the user out by augmenting their knowledge. Here, we can find semantics and matching.

These capabilities are able to process huge quantities of data and display what is relevant much faster than a human could, as well as notice patterns that humans may not. It’s all about delivering the right information at the right time.

Skills taxonomy and semantics have also come into play at this level. This capability is constantly being expanded as the topic of skills is gaining momentum in the talent acquisition community every day.

Such a task is not scalable for humans to perform but is a perfect use case of AI for HR. Rabih points out that through machine learning, we’re able to take the data we have and learn how skills relate to each other and to jobs, as well as infer jobs from skills and vice versa.
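To give a feel for inferring jobs from skills and vice versa, here is a deliberately simple sketch in which plain counting stands in for a learned model. The job titles, skills and helper functions are all invented for the example.

```python
from collections import Counter, defaultdict

# Hypothetical (job title, skills) pairs standing in for real profile data.
PROFILES = [
    ("data analyst", {"sql", "excel", "python"}),
    ("data analyst", {"sql", "tableau"}),
    ("recruiter", {"sourcing", "interviewing"}),
    ("recruiter", {"sourcing", "negotiation", "excel"}),
]


def build_index(profiles):
    """Count how often each skill appears alongside each job title."""
    skills_by_job = defaultdict(Counter)
    for job, skills in profiles:
        skills_by_job[job].update(skills)
    return skills_by_job


def infer_job(skills, skills_by_job):
    """Skills -> job: pick the job whose observed skills overlap the most."""
    def overlap(job):
        return sum(skills_by_job[job][skill] for skill in skills)
    return max(skills_by_job, key=overlap)


def top_skills(job, skills_by_job, n=1):
    """Job -> skills: return the n skills seen most often with this job."""
    return [skill for skill, _ in skills_by_job[job].most_common(n)]


index = build_index(PROFILES)
print(infer_job({"sql", "python"}, index))
print(top_skills("recruiter", index))
```

With only four profiles this is trivial, but the same relationships across millions of records are exactly what would be impractical to maintain by hand.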

Level Three

The third level is related to predictive analytics and intelligence in the platform itself. Agility and flexibility are the main properties of this level. As Rabih notes of the Avature platform itself: “There are no two instances that are the same.”

So, the next frontier in our AI strategy is related to improving the user experience for each individual, making sure that both the outcomes and the process as a whole are positive.

In our platform, in particular, one of the examples Rabih mentions is wanting to help the user optimize the usage of workflows and how to put them together. This, he says, can be done by utilizing techniques like reinforcement learning, where the user is modeled as the agent in a specific environment and the system, by taking note of their interactions with the platform, guides them through it all.
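For readers unfamiliar with reinforcement learning, the toy sketch below uses tabular Q-learning, one common technique in that family, to learn which next step to suggest in a made-up three-step workflow. The states, actions, rewards and parameters are all hypothetical and are not drawn from the Avature platform.

```python
import random

# Hypothetical workflow: states are steps, actions are what happens next.
STATES = ["new", "screened", "interviewed", "done"]
ACTIONS = ["screen", "interview", "offer"]


def step(state, action):
    """Toy environment: return (next_state, reward) for an action."""
    transitions = {
        ("new", "screen"): ("screened", 0.0),
        ("screened", "interview"): ("interviewed", 0.0),
        ("interviewed", "offer"): ("done", 1.0),
    }
    # Any out-of-order action leaves the state unchanged with a small penalty.
    return transitions.get((state, action), (state, -0.1))


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn action values with epsilon-greedy tabular Q-learning."""
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in STATES for a in ACTIONS}
    for _ in range(episodes):
        state = "new"
        for _ in range(20):  # cap the episode length
            if state == "done":
                break
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)  # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])  # exploit
            next_state, reward = step(state, action)
            best_next = max(q[(next_state, a)] for a in ACTIONS)
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q


def suggest(q, state):
    """Suggest the action with the highest learned value in this state."""
    return max(ACTIONS, key=lambda a: q[(state, a)])


q_table = train()
for s in ["new", "screened", "interviewed"]:
    print(s, "->", suggest(q_table, s))
```

In a real setting the reward would come from observed outcomes rather than a hand-written table, and the learned values would feed suggestions that the user remains free to ignore.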

The limits of technology are constantly expanding. In this sense, Rabih predicts that a big focus moving forward will be on evolution and figuring out how technology can be used to work with us.

“It’s exciting to be taking part in that and have the opportunity to help shape the future.”

-Rabih Zbib. Director of Natural Language Processing & Machine Learning at Avature.