For organizations looking to deliver a high-touch candidate experience, intelligent job recommendations have become a key capability of career sites to match visitors with relevant opportunities. And the same technology provides many benefits for recruiters too, by allowing them to speed up the process of identifying qualified candidates.

From skills to educational background, systems with matching functionalities can consider multiple variables to establish compatibility between candidates and open positions. However, past experience, and specifically the similarity between job titles, is one of the essential factors.

Building on its skill semantics capabilities, our Machine Learning research team has developed an innovative approach to tackle semantic similarity between job titles that relies on unsupervised representation learning.

We sat down with our resident AI expert Rabih Zbib, Director of Natural Language Processing & Machine Learning, to discuss the most noteworthy points of that work, published in the research paper “Learning Job Titles Similarity from Noisy Skill Labels.” From how the model is trained and how it works to the benefits it brings for Avature and our customers, read on to learn more about the robust AI engine that powers our platform.

What is Newsworthy About This Approach?

We wanted our model to compare two job titles and deduce how similar they are. The conventional solution for this objective is to develop a supervised model trained on a very large data set of job title pairs, manually annotated by humans who define whether or not they are similar to each other. But because annotating a data set big enough is a slow and rigid process, our ML researchers took a different approach to training the model using semantic representations of the job title.

We’ll go into the technical details of the model later, but first, the resulting model has two key characteristics that make it stand out among other approaches in the market:

- It relies on unsupervised learning — a truly revolutionary method in AI —, making it more generalizable, cost-effective and easier to extend with more data because it doesn’t require human annotations.

- Because of this, it enables faster development and deployment, which delivers significant short and long-term value to our customers.

Why Go in This Direction?

The driving factor behind innovation at Avature is to create value for customers by delivering powerful tools that enhance their talent processes. And in this case, it’s no different. We chose to pursue this approach because of the numerous benefits that it delivers for our customers:

- The use cases that we empower through AI save our customers’ recruiters significant time and overall enable them to enhance the experiences of every stakeholder involved in talent-related processes. As we bring in new models to strengthen our cross-platform AI functionalities and deploy them quickly, we also ensure that our customers continue to reap those benefits.

- Because the model operates on the semantic level, it allows job titles to be compared intelligently and not simply based on superficial keyword matching. Moreover, the model can generalize, which means it can predict similarity even for job titles that were not included in the data the model was trained on. This is a powerful benefit because, in the world of work, new job titles appear all the time.

- Being able to build our ML model without human annotation enables us to update it more rapidly, preventing the model from becoming stale. As the labor market changes, our ability to make periodic updates to our core AI technology ensures our customers always receive accurate results, avoiding the detrimental impact of model drift.

- The semantic representations used to train the job title model are language-independent. Using this approach, we have developed our functionality in French, German, Spanish and Italian in addition to English, with many more languages on our roadmap. For our global customers operating in many different countries, the ability to consistently leverage our AI functionalities in multiple languages is incredibly valuable.

How the Model Works

In our previous blog on skill semantics, we described how skills are modeled as vectors, or points, in a high-dimensional semantic space. We explained how that space, which is constructed using a neural network model trained on real-world data, enables us to measure the degree of similarity between skills based on the distance between their corresponding points in that space. The semantics of the skills are represented through these vectors (referred to as embeddings), in what is called representation learning.

Now, going back to job titles. The insight the Avature ML team had is that a representation of the job title can be obtained from the representations of the skills related to them. For that purpose, they extracted skills from millions of job requisitions and the work experience sections of anonymized resumes, and then associated them with the related job titles.

That was done over a very large data set, and the skills extracted from different data records but corresponding to the same job title were combined together, helping keep track of how many times a skill was found with the same job title. The result looked something like this:

Director of Communications: { “Public Relations”: 135, “Social Media”: 128, “Campaigns”: 93, “Writing”: 55, “Strategy”: 18, … }

Figure 1. Obtaining the representation of a job title from the related skills.

A semantic representation of the job title can then be obtained by averaging the embedding vectors of all the related skills and using the counts as weights to reflect the importance of each skill. This representation could be used directly to compare job titles.

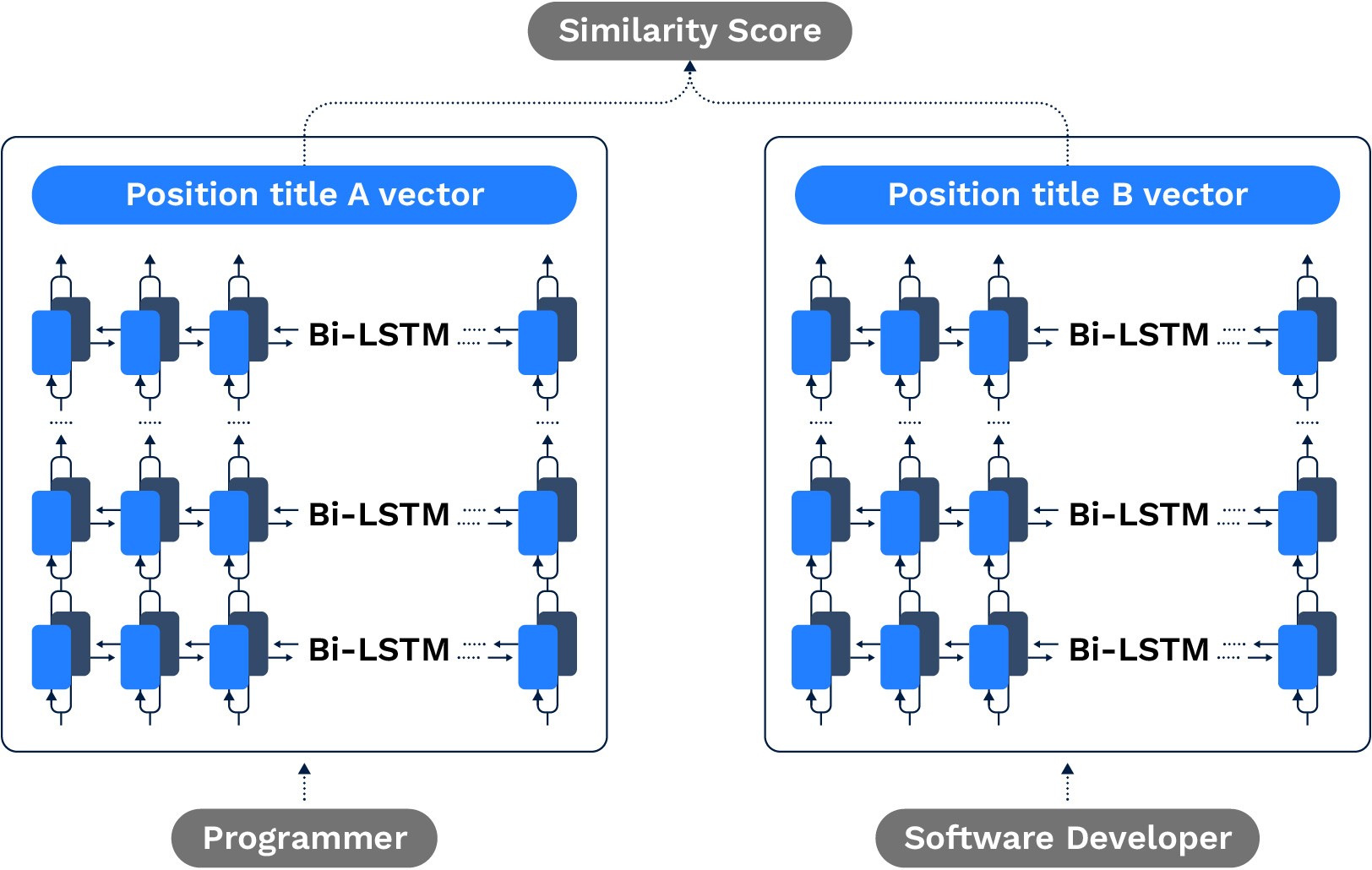

Figure 2. Example of the Job Titles model in use. The recurrent neural networks produce an embeddings vector for each title, capturing its semantics. Two vectors are then compared to determine the similarity between their corresponding embeddings.

But that would be limited to the job titles seen enough times in the training data. To ensure the model’s ability to generalize, we wanted to go further. So instead, our researchers used these as ideal representations of the job titles (or “objectives” in AI terminology)and trained a recurrent neural network (or RNN) to mimic that ideal representation.

Once trained, the RNN can then produce such a representation from the job title. This includes new job titles that have not been seen in the training data.

Another important feature of this model is that it does not need the related skills to produce the embedding of a job title. The related skills are only used to train the model. And so, the team achieved the goal we set up for ourselves: A model that can compare two job titles based on their semantics and has remarkably been trained using unsupervised representations instead of the slow and costly manual annotations.

Using the semantics of skills as representations of the job titles also allows us to overcome the longest bottleneck in AI development, which is data. Even though skills are expressed in a particular language, let’s say English, as concepts, they are language-independent. “Public Relations” is still related to “Social Media,” whether those skills are expressed in English or German. This means that to obtain training data in a new language, all we have to do is translate the job titles of that data and reuse the semantic representation as is. Thanks to this property, we can train the AI models in new languages and offer our AI capabilities in those languages in record time.

Development for Transparency and Flexibility

Tools such as ChatGPT have propelled AI into the mainstream in a way we’ve never seen before. But conversations and concerns around this technology in recruiting are not new. In fact, these concerns are driving increased regulation in this space. For example, New York City passed a law that will require employers to conduct bias audits on automated employment decision tools, including those that utilize AI.

Understanding how important it is for our customers to ensure unbiased and fair talent processes, we do not train our native AI models using personal information, such as race, age, place of birth and gender, among others. We also avoid implicit bias by not resorting to historical data of human decisions to train our AI models. Instead, our approach is representation-based, where we model the semantics of attributes of the candidate’s profile, like skills or the job title. Our method for training the job titles model fits into this philosophy.

But the premise of transparency goes beyond model training. Using a representation-based model for each different type of attribute enables our white-box approach to AI, giving Avature users complete visibility into and control over the system’s inner workings so that their decision-making is enhanced but not replaced.

First with skill semantics and now with job titles similarity, we strive to develop ML models that power impactful solutions to streamline our customers’ recruiting and talent management programs. Most importantly, our objective is to develop flexible technologies that can keep up with the challenges coming down the line.

Years ago, we decided to build our AI algorithms natively. This has allowed us to collaborate closely with our customers to innovate in line with their visions and needs. And for them, it means having full access to the experts behind the models, in contrast to what could occur with vendors that outsource their AI development. If you too would like to speak to our specialists and learn more about our ML models and overall AI capabilities, get in touch with us.

If you would like to read the full paper, please find the link below:

Rabih Zbib, Lucas Lacasa Alvarez, Federico Retyk, Rus Poves, Juan Aizpuru, Hermenegildo Fabregat, Vaidotas Simkus and Emilia Garcıa-Casademont, 2022, Learning Job Titles Similarity from Noisy Skill Labels, arXiv: 2207.00494 [cs.IR]